May 16, 2023 | Volume 19 Issue 19

Designfax weekly eMagazine

Almost any object can become a touch input device

A new technique for recording and analyzing surface-acoustic waves can enable nearly any object to act as a touch input device and power privacy-sensitive sensing systems

By Zachary Champion, University of Michigan College of Engineering

Couches, tables, coat sleeves, and more can turn into high-fidelity input devices for computers using a new sensing system developed at the University of Michigan (U-M).

The system repurposes technology from new bone-conduction microphones, known as Voice Pickup Units (VPUs), which detect only those acoustic waves that travel along the surface of objects. It works in noisy environments, along odd geometries such as toys and arms, and on soft fabrics such as clothing and furniture.

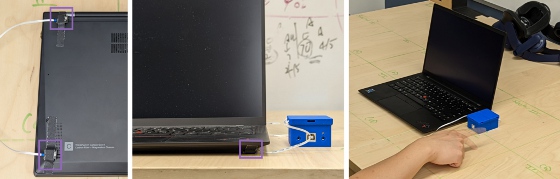

A sensing system called SAWSense takes advantage of acoustic waves traveling along the surface of an object to enable touch inputs to devices almost anywhere. Here, a table is used to power a laptop's trackpad. [Image credit: Interactive Sensing and Computing Lab, University of Michigan]

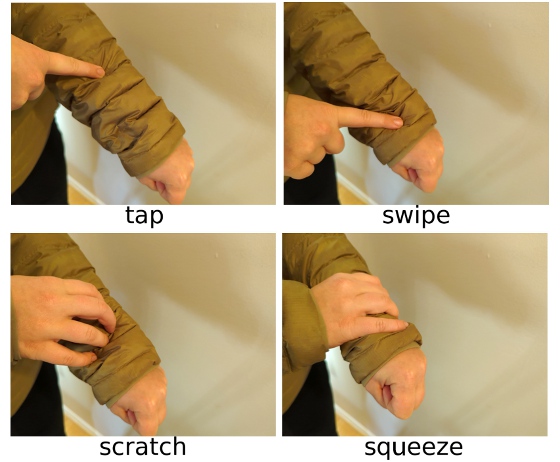

Called SAWSense, for the surface acoustic waves it relies on, the system recognizes different inputs, such as taps, scratches, and swipes, with 97% accuracy. In one demonstration, the team used a normal table to replace a laptop's trackpad.

"This technology will enable you to treat, for example, the whole surface of your body like an interactive surface," said Yasha Iravantchi, U-M doctoral candidate in computer science and engineering. "If you put the device on your wrist, you can do gestures on your own skin. We have preliminary findings that demonstrate this is entirely feasible."

Taps, swipes, and other gestures send acoustic waves along the surfaces of materials. The system then classifies these waves with machine learning to turn all touch into a robust set of inputs. The system was presented recently at the 2023 Conference on Human Factors in Computing Systems, where it received a best paper award.

As more objects incorporate smart or connected technology, designers face a number of challenges in giving them intuitive input mechanisms. The result is often a clunky integration of input methods such as touch screens and mechanical or capacitive buttons, Iravantchi says. Touch screens may be too costly to enable gesture inputs across large surfaces like counters and refrigerators, while buttons only allow one kind of input at predefined locations.

SAWSense is able to recognize different types of touch input on soft and irregularly shaped objects, such as clothing. [Image credit: Interactive Sensing and Computing Lab, University of Michigan]

Past approaches to overcome these limitations have included the use of microphones and cameras for audio- and gesture-based inputs, but the authors say techniques like these have limited practicality in the real world.

"When there's a lot of background noise, or something comes between the user and the camera, audio and visual gesture inputs don't work well," Iravantchi said.

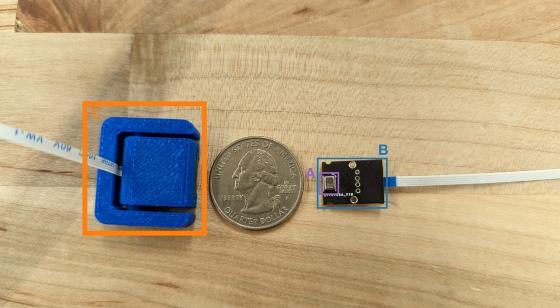

To overcome these limitations, the sensors powering SAWSense are housed in a hermetically sealed chamber that completely blocks even very loud ambient noise. The only entryway is through a mass-spring system that conducts the surface-acoustic waves into the housing, so the sensor never comes in contact with sounds in the surrounding environment. When combined with the team's signal-processing software, which generates features from the data before feeding them into the machine learning model, the system can record and classify the events along an object's surface.
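To make the "features, then classifier" idea concrete, here is a minimal sketch in Python. It is not the team's software: the two features (zero-crossing rate and a late-to-early energy ratio), the nearest-centroid classifier, the 16 kHz sample rate, and the synthetic "tap" and "scratch" signals are all assumptions chosen only for illustration.

```python
import math
import random

SAMPLE_RATE = 16_000  # Hz; assumed value, not taken from the article


def extract_features(signal):
    """Return (zero-crossing rate, log10 of late/early energy) for one window."""
    crossings = sum(1 for a, b in zip(signal, signal[1:]) if (a < 0) != (b < 0))
    zcr = crossings / (len(signal) - 1)
    half = len(signal) // 2
    early = sum(x * x for x in signal[:half])
    late = sum(x * x for x in signal[half:])
    decay = math.log10((late + 1e-12) / (early + 1e-12))
    return (zcr, decay)


def synth_tap(rng, n=512):
    """Hypothetical tap: a decaying low-frequency burst plus faint noise."""
    freq = rng.uniform(150.0, 300.0)
    return [
        math.exp(-60.0 * i / SAMPLE_RATE)
        * math.sin(2 * math.pi * freq * i / SAMPLE_RATE)
        + rng.gauss(0.0, 0.01)
        for i in range(n)
    ]


def synth_scratch(rng, n=512):
    """Hypothetical scratch: sustained broadband noise."""
    return [rng.gauss(0.0, 0.3) for _ in range(n)]


def train_centroids(examples):
    """Average the feature vectors of each label's example signals."""
    centroids = {}
    for label, signals in examples.items():
        feats = [extract_features(s) for s in signals]
        centroids[label] = tuple(sum(col) / len(col) for col in zip(*feats))
    return centroids


def classify(signal, centroids):
    """Return the label whose centroid is closest to the signal's features."""
    feats = extract_features(signal)
    return min(centroids, key=lambda label: math.dist(feats, centroids[label]))


rng = random.Random(0)
training = {
    "tap": [synth_tap(rng) for _ in range(20)],
    "scratch": [synth_scratch(rng) for _ in range(20)],
}
centroids = train_centroids(training)
```

Calling `classify(signal, centroids)` on a new window returns the label of the nearest class centroid. A real system would likely need richer spectral features and a stronger model to separate gestures as similar as a swipe and a scratch.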

Voice Pickup Units (VPUs) power SAWSense. Adapted from bone-conduction microphones, the small sensors (outlined in orange in this photo) are able to detect and record any event happening on a surface with a high amount of detail. [Image credit: Interactive Sensing and Computing Lab, University of Michigan]

"There are other ways you could detect vibrations or surface-acoustic waves, like piezo-electric sensors or accelerometers," said Alanson Sample, U-M associate professor of electrical engineering and computer science, "but they can't capture the broad range of frequencies that we need to tell the difference between a swipe and a scratch, for instance."

The high fidelity of the VPUs allows SAWSense to identify a wide range of activities on a surface beyond user touch events. For instance, a VPU on a kitchen countertop can detect chopping, stirring, blending, or whisking, as well as identify electronic devices in use such as a blender or microwave.

"VPUs do a good job of sensing activities and events happening in a well-defined area," Iravantchi said. "This allows the functionality that comes with a smart object without the privacy concerns of a standard microphone that senses the whole room, for example."

When multiple VPUs are used in combination, SAWSense could enable more specific and sensitive inputs, especially those that require a sense of space and distance like the keys on a keyboard or buttons on a remote.

In addition, the researchers are exploring the use of VPUs for medical sensing, including picking up delicate noises such as the sounds of joints and connective tissues as they move. The high-fidelity audio data VPUs provide could enable real-time analytics about a person's health, Sample says.

The team has applied for patent protection with the assistance of U-M Innovation Partnerships and is seeking partners to bring the technology to market.

Published May 2023
